In this post I want to take a quick look at how Image Keyboard Support (IKS) works and how we can add it to our applications.

When it comes to IKS there are two key functionalities that it provides:

- The ability for applications to receive rich content from keyboards

- The ability for keyboards to send rich content to applications

Whilst your implementation may not care about both of these, it shows that there are two parts to consider when it comes to IMEs that support rich content. The application needs to explicitly declare support for rich content from IMEs, whilst the IME itself needs to be able to provide that content. So whilst your application might support rich content, the IME in use might not be configured to provide it, and vice versa.

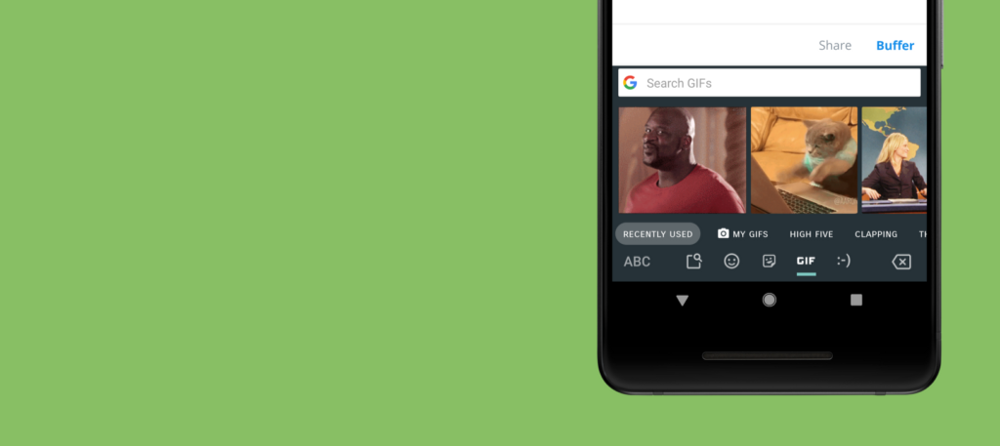

So for example, let’s say you have an application which allows the user to input content via an IME. When the user taps on an EditText (or it automatically gains focus), the EditText sends a list of supported MIME types (in the form of contentMimeTypes) so that the IME is aware of what rich content it can provide to the EditText.

Next the IME will use this list of supported content types to decide which rich content can be displayed for selection by the user. At this point the user will be able to select an image from the keyboard, which is when the IME will commit the content (using commitContent()). Your application will then receive an instance of the InputContentInfo class, which it can use to retrieve the selected media item (once it has requested permission to do so).
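To make the keyboard half of this flow a little more concrete, here is a minimal Kotlin sketch of what an IME might do when the user picks a GIF. The helper names `editorAccepts` and `commitGif` are made up for illustration, and I'm assuming the IME already exposes the image through its own ContentProvider so that `contentUri` is a `content://` URI.

```kotlin
import android.content.ClipDescription
import android.net.Uri
import android.os.Build
import android.view.inputmethod.EditorInfo
import android.view.inputmethod.InputConnection
import androidx.core.view.inputmethod.EditorInfoCompat
import androidx.core.view.inputmethod.InputConnectionCompat
import androidx.core.view.inputmethod.InputContentInfoCompat

// Hypothetical helper: true if the focused editor declared support for mimeType.
fun editorAccepts(editorInfo: EditorInfo, mimeType: String): Boolean =
    EditorInfoCompat.getContentMimeTypes(editorInfo)
        .any { declared -> ClipDescription.compareMimeTypes(mimeType, declared) }

// Hypothetical helper: commits a GIF to the editor, returning false if the
// editor did not advertise image/gif or the commit was not accepted.
fun commitGif(
    connection: InputConnection,
    editorInfo: EditorInfo,
    contentUri: Uri // assumed to be served by the IME's own ContentProvider
): Boolean {
    if (!editorAccepts(editorInfo, "image/gif")) return false

    val content = InputContentInfoCompat(
        contentUri,
        ClipDescription("Selected GIF", arrayOf("image/gif")),
        null // no optional link URI in this sketch
    )

    // On API 25+ this flag lets the receiving app request temporary read access.
    val flags = if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.N_MR1) {
        InputConnectionCompat.INPUT_CONTENT_GRANT_READ_URI_PERMISSION
    } else {
        0
    }

    return InputConnectionCompat.commitContent(connection, editorInfo, content, flags, null)
}
```

Inside an InputMethodService you would typically pass `currentInputConnection` and `currentInputEditorInfo` to a helper like this.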

To support this within our applications we need to make a small tweak to the EditText which we wish to accept rich content. This essentially involves overriding the onCreateInputConnection() function of the EditText class so that our application is able to set up the content types that it can receive and listen for content of these types that the IME commits.

Let’s take a little look at what this consists of:

https://gist.github.com/hitherejoe/b6b759bbbde9f6c41e7f16a1727a1a4a

Let’s break down what it is we’re doing here:

- On line 2 we begin by retrieving an instance of an InputConnection. This is an interface which handles the communication between the input method and our application. We only need this reference so that we can pass it back as part of the wrapper created at the end of this function.

- Next we use the EditorInfoCompat to declare the collection of MIME types that our EditText will support. Here I have just declared that we support GIFs and PNGs, but this will likely vary depending on the application that is implementing this feature.

- Now that we have the types of media defined, we need to create an instance of the callback that will receive these rich content responses from user input. For this we’re going to define a new OnCommitContentListener. Within this callback we then handle the responses that we get back from the IME.

- We first check that the device is running at least API version 25 (7.1) and that the flags passed into the callback include INPUT_CONTENT_GRANT_READ_URI_PERMISSION. Despite the name, this is a flag set by the IME rather than a permission held by our application: when it is present, the IME is offering temporary read access to the content, which we need to request before using it. Note: I haven’t included any extra handling for the case where this check fails, to keep the code sample simpler.

- Next we request permission to access the URI of the content that we have just received. This is only a temporary grant, but gives us read-only access so that we can retrieve the media item that the user has selected.

- Now that we have permission to access the content we can use the contentUri on our InputContentInfo instance, which is the location at which the selected item is accessible. You can use this URI to display the item on screen, upload it to your server or do anything else with it inside of your application. In the example above we use a listener to pass it back to the parent class. Remember you only have temporary access to this URI, so it’s best practice not to persist it for use another time.

- Finally we return a wrapped InputConnection using the createWrapper() function that InputConnectionCompat provides. This essentially takes our existing InputConnection and our newly created OnCommitContentListener and wraps them both along with our EditorInfo instance.
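Since the gist is only linked rather than shown inline, the steps above can be sketched roughly as follows. This is not a copy of the gist: the class name `RichContentEditText`, the `onContentReceived` property and the choice of AppCompatEditText as the base class are my own assumptions for illustration.

```kotlin
import android.content.Context
import android.net.Uri
import android.os.Build
import android.util.AttributeSet
import android.view.inputmethod.EditorInfo
import android.view.inputmethod.InputConnection
import androidx.appcompat.widget.AppCompatEditText
import androidx.core.view.inputmethod.EditorInfoCompat
import androidx.core.view.inputmethod.InputConnectionCompat

// Hypothetical EditText subclass that accepts GIFs and PNGs from the keyboard.
class RichContentEditText @JvmOverloads constructor(
    context: Context,
    attrs: AttributeSet? = null
) : AppCompatEditText(context, attrs) {

    // Assumed hook for passing the content URI back to the parent class.
    var onContentReceived: ((Uri) -> Unit)? = null

    override fun onCreateInputConnection(editorInfo: EditorInfo): InputConnection? {
        // The existing connection between the IME and this view (may be null).
        val connection = super.onCreateInputConnection(editorInfo) ?: return null

        // Declare the MIME types that this field can receive.
        EditorInfoCompat.setContentMimeTypes(editorInfo, arrayOf("image/gif", "image/png"))

        val listener = InputConnectionCompat.OnCommitContentListener { info, flags, _ ->
            val grantsRead =
                (flags and InputConnectionCompat.INPUT_CONTENT_GRANT_READ_URI_PERMISSION) != 0
            if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.N_MR1 && grantsRead) {
                try {
                    // Temporary, read-only access to the committed content.
                    info.requestPermission()
                } catch (e: Exception) {
                    // Tell the IME that we could not handle this content.
                    return@OnCommitContentListener false
                }
            }
            onContentReceived?.invoke(info.contentUri)
            true // the content was handled
        }

        // Wrap the original connection so that commitContent() calls reach our listener.
        return InputConnectionCompat.createWrapper(connection, editorInfo, listener)
    }
}
```

From an Activity or Fragment you could then set something like `editText.onContentReceived = { uri -> showPreview(uri) }`, where `showPreview` is whatever your application does with the image.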

That’s all that is required to add native Image Keyboard Support to your application, allowing you to seamlessly accept media input directly from supporting IMEs. Currently this is only supported from API version 25, but who knows how support for this may change in the future. Are you using this feature already, or do you have any questions on getting this implemented in your application? Feel free to reach out if so 🙂