With the first Android 12 Developer Preview announced yesterday, I started to dive into some of the API changes and the new things we’ll be getting our hands on. One of these is the new unified rich content API, which provides a simplified way of transferring media between applications. You may have previously used Image Keyboard Support when using or developing Android applications, which allowed you to insert media into applications directly from the keyboard.

The new unified media API takes this to a completely new level. Not only can you use it to insert media using the keyboard, but apps will also be able to support pasting images directly from the paste menu, as well as media input via drag and drop. With media being a huge part of applications, this change greatly reduces the friction when it comes to transferring media across applications.

With this new feature available to play with, in this blog post we’ll explore this new functionality – looking at how the API works and how we can implement it into applications targeting Android 12.

Note: To be able to implement this functionality, you’ll need the following dependencies in your project, which should be targeting Android 12.

implementation 'androidx.core:core-ktx:1.5.0-beta01'

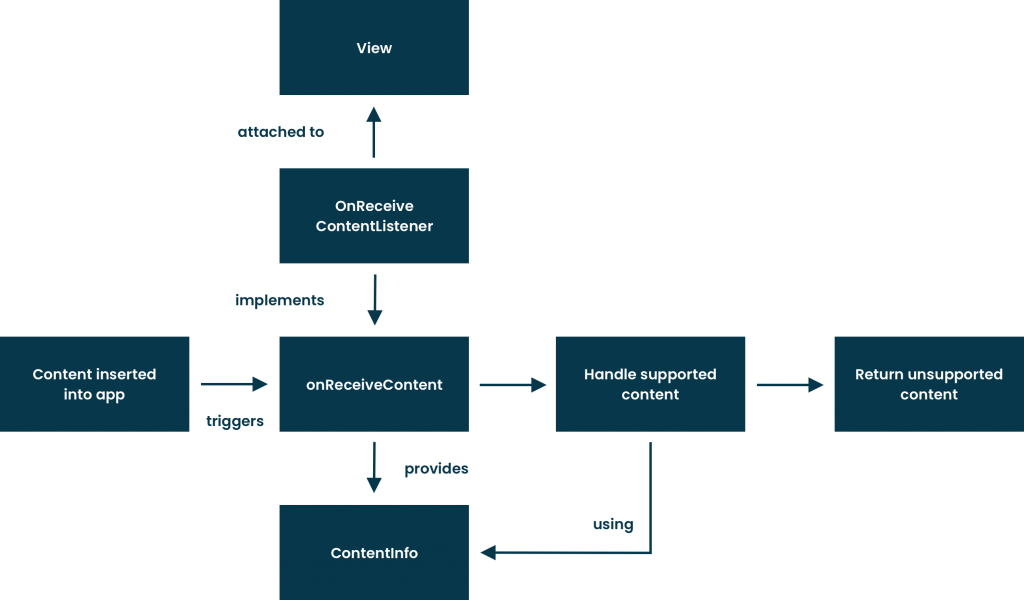

implementation 'androidx.appcompat:appcompat:1.3.0-beta01'When it comes to the unified rich media API, the most important addition is the OnReceiveContentListener – this listener can be implemented and then applied to a view reference to receive content input events. When the support media types are detected by the listener, the content is received in the form of a ContentInfo reference which can then be used to handle the supplied media.

So, there are a couple of things happening here. Let’s take a quick walk through the requirements and flow.

- Views that want to support rich media input attach an OnReceiveContentListener.

- When media is inserted into the supporting view, the OnReceiveContentListener will be called and its onReceiveContent function will be triggered.

- When this function is called, an instance of a ContentInfo will be received. This contains all of the required details around the attached media.

- Once the attached media that the app supports has been handled, any unhandled media should be returned from the function so that other handlers can utilise that media.
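To make that flow concrete, here is a minimal sketch of a listener that handles nothing itself. It assumes the AndroidX OnReceiveContentListener and ContentInfoCompat types; the loggingListener name and the ContentDemo log tag are made up for illustration.

```kotlin
import android.util.Log
import androidx.core.view.OnReceiveContentListener

// Hypothetical listener: logs what arrives and consumes none of it.
val loggingListener = OnReceiveContentListener { _, payload ->
    Log.d(
        "ContentDemo",
        "Received ${payload.clip.itemCount} item(s), source=${payload.source}"
    )
    // Returning the payload unchanged marks all of it as unhandled,
    // so the view's default handling can still process it.
    payload
}
```

Because the whole payload is returned, this listener only observes the content. Returning null instead would signal that everything was handled.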

With that in mind, let’s take a look at how we could implement this inside of our app. We’ll start by creating a new class that implements OnReceiveContentListener. When you do this, you’ll be prompted to implement a required function: the onReceiveContent callback.

class ContentReceiver: OnReceiveContentListener {

override fun onReceiveContent(

view: View,

contentInfo: ContentInfoCompat

): ContentInfoCompat? {

}

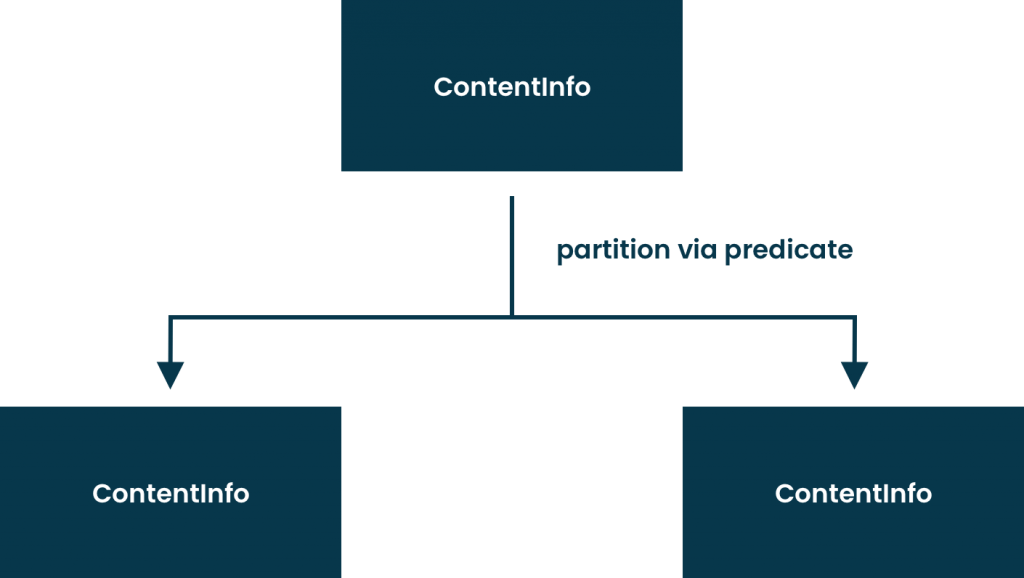

}Inside of this callback we have access to the provided ContentInfo reference, meaning that we can handle any supported attachments for our app – so we’ll need to start by retrieving the URIs of these media attachments so that we can use them. For this, the ContentInfo contains a partition function that can be used to split the provided results based on a given predicate. The result of this function will consist of a pair of ContentInfo references, each containing data that match the predicate followed by those that don’t.

    val split = contentInfo.partition { item: ClipData.Item -> item.uri != null }

Based on the above example, with this split result we now have two separate ContentInfo references – most importantly, we now know which items have a content URI that we can utilise. In the case of our application, we only care about the first part of the pair, as this contains the ContentInfo that matches our given predicate, meaning that our application can handle these items. With this, we’ll be able to access the clip data for each item inside of the ContentInfo. For example’s sake, we’ll go ahead and use the first item, accessing its URI property. In a realistic scenario, you’d likely want to loop over these and support each item in your application individually.
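If you do want to support every item rather than only the first, a small helper along these lines could collect all of the URIs. This is only a sketch: urisOf is a hypothetical name, not part of the API.

```kotlin
import android.net.Uri
import androidx.core.view.ContentInfoCompat

// Hypothetical helper: collects every non-null URI held by the given content.
fun urisOf(content: ContentInfoCompat): List<Uri> {
    val clip = content.clip
    // ClipData exposes its items by index, so walk the full range.
    return (0 until clip.itemCount).mapNotNull { index ->
        clip.getItemAt(index).uri
    }
}
```

You could call this on the first half of the partitioned pair and handle each returned URI in turn.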

    split.first?.let { uriContent ->
        val uri = uriContent.clip.getItemAt(0).uri
    }

Now that we have the URI for a media item, we need to do something with it! Because this implementation of the OnReceiveContentListener is a class that we will attach to a view reference, we’re going to need a way for this URI data to be passed back to the containing class. For this reason, we’re going to add a callback function to the constructor of the class.

    class ContentReceiver(private val contentReceived: (uri: Uri) -> Unit)

We’ll then go ahead and utilise this callback, using the URI for our media item as the required URI argument:

    split.first?.let { uriContent ->
        val uri = uriContent.clip.getItemAt(0).uri
        contentReceived(uri)
    }

Now that we’ve handled the supported media URIs in our app, we need to wrap up this class by ensuring that we return the unsupported items from the onReceiveContent function. This is the second item in our partitioned pair, and returning it allows that content to be handled elsewhere, in places where it may be supported.

    override fun onReceiveContent(
        view: View,
        contentInfo: ContentInfoCompat
    ): ContentInfoCompat? {
        ...
        return split.second
    }

With this in place, we have a completed OnReceiveContentListener implementation – allowing us to receive and filter media URIs that can be handled by our application.

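    import android.content.ClipData
    import android.net.Uri
    import android.view.View
    import androidx.core.view.ContentInfoCompat
    import androidx.core.view.OnReceiveContentListener

    class ContentReceiver(
        private val contentReceived: (uri: Uri) -> Unit
    ) : OnReceiveContentListener {

        override fun onReceiveContent(
            view: View,
            contentInfo: ContentInfoCompat
        ): ContentInfoCompat? {
            // Split the content into items with a URI (first) and everything else (second)
            val split = contentInfo.partition { item: ClipData.Item -> item.uri != null }
            // Pass the first supported URI back to the owner of this receiver
            split.first?.let { uriContent ->
                contentReceived(uriContent.clip.getItemAt(0).uri)
            }
            // Return whatever we did not handle so it can be processed elsewhere
            return split.second
        }
    }

The next step here is plugging in our new implementation so that we can handle these supported media URIs in our application. We’ll go ahead and create a new reference to our ContentReceiver class, providing the required implementation of the contentReceived callback that we placed in the constructor: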

    val contentReceiver = ContentReceiver { uri ->
        // load image using provided URI
    }

Within our callback we can take the provided URI and load it into an ImageView within our UI. With this in place, we now need to assign this receiver to a view so that media content can be received via user input. For this we’ll need to use the ViewCompat setOnReceiveContentListener function, which allows us to assign our listener to a view, along with stating the MIME types that are supported by it.

    ViewCompat.setOnReceiveContentListener(
        myEditText,
        arrayOf("image/*", "video/*"),
        contentReceiver
    )

We can see here that the setOnReceiveContentListener function takes three arguments:

- view – the view which the listener is to be applied to

- mimeTypes – a string array defining the supported mime types

- listener – the OnReceiveContentListener implementation to handle rich media
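Our predicate only checks that an item has a URI, so if you want to double-check the type of an individual URI before using it, one option is to ask the ContentResolver. The sketch below assumes you have a Context to hand; isImage is a hypothetical helper name.

```kotlin
import android.content.ClipDescription
import android.content.Context
import android.net.Uri

// Hypothetical helper: true when the resolver reports an image/* type for the URI.
fun isImage(context: Context, uri: Uri): Boolean {
    // getType returns null when the type of the URI cannot be determined.
    val type = context.contentResolver.getType(uri) ?: return false
    // compareMimeTypes understands wildcard patterns such as "image/*".
    return ClipDescription.compareMimeTypes(type, "image/*")
}
```

A check like this could sit inside the contentReceived callback, before the URI is handed to anything that expects an image.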

With this in place, our provided view (in this case, an edit text) now supports rich media in the form of images and video. Any media that is provided to this view is now handled by our OnReceiveContentListener implementation, with any supported media being passed back to our class via our ContentReceiver class callback. This means we can handle rich media content via the paste menu, keyboard and drag and drop in our applications.
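Putting the pieces together, the wiring inside an activity might look something like the sketch below. It assumes the ContentReceiver class from this post; MediaInputActivity is a hypothetical name, and the views are built in code only so that the example does not depend on any layout resources.

```kotlin
import android.os.Bundle
import android.widget.ImageView
import android.widget.LinearLayout
import androidx.appcompat.app.AppCompatActivity
import androidx.appcompat.widget.AppCompatEditText
import androidx.core.view.ViewCompat

// Hypothetical activity showing one way to wire the receiver to a view.
class MediaInputActivity : AppCompatActivity() {

    override fun onCreate(savedInstanceState: Bundle?) {
        super.onCreate(savedInstanceState)

        // An input field that receives content and an ImageView that previews it.
        val preview = ImageView(this)
        val input = AppCompatEditText(this)
        setContentView(LinearLayout(this).apply {
            orientation = LinearLayout.VERTICAL
            addView(input)
            addView(preview)
        })

        // ContentReceiver is the class built earlier in this post.
        val contentReceiver = ContentReceiver { uri -> preview.setImageURI(uri) }

        // Only images are declared here, since the preview is an ImageView.
        ViewCompat.setOnReceiveContentListener(
            input,
            arrayOf("image/*"),
            contentReceiver
        )
    }
}
```

setImageURI keeps the sketch short, but it decodes the image on the UI thread, so for larger media you would probably want to hand the URI to an image loading library or a background task instead.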

In this post we’ve dived into the new unified rich media APIs coming in Android 12. I’m quite excited to use these new features in applications, as well as add them to my own apps for users to enjoy! Is this something you’ll be able to use in your apps, or do you have any thoughts on the functionality? I’d love to hear any comments if so!